More Artificial than Intelligent, and it is only getting worse

[This post is translated from the Dutch version by Claude.]

This isn't the first time I'm writing critically about AI. I've written about programming with an AI copilot before, but apparently a fox is caught twice in the same snare. Last time I thought AI could be useful, as long as it was limited to creating small proof-of-concepts that would never see the light of day, but I'm taking that back now.

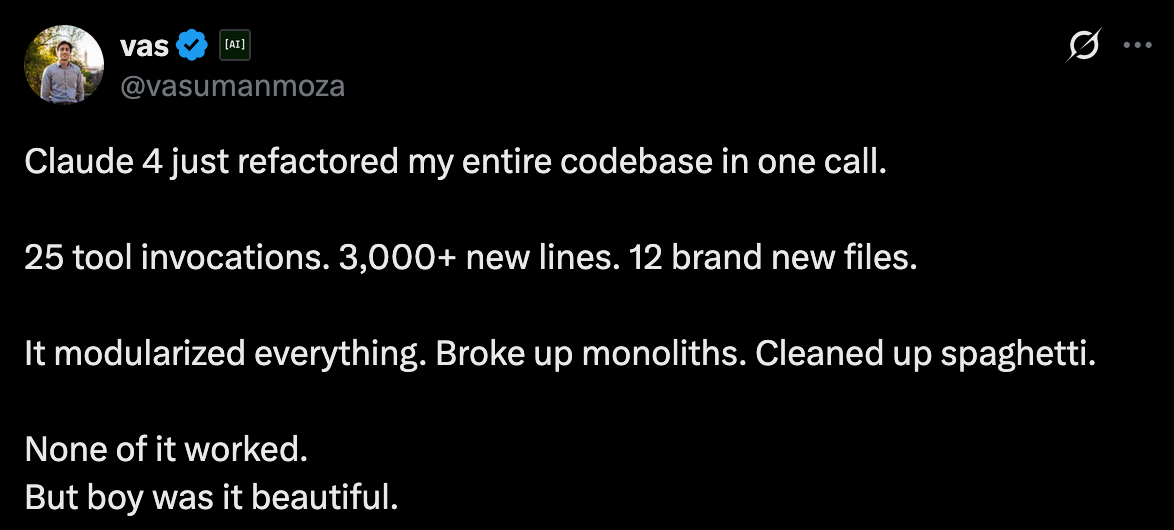

Claude helped me enormously last week by writing a complete Android app that connects to a Chromecast, in under a minute. Or so I thought. The neatly organized code turned out to be a neatly organized pile of junk, which required so much cleanup work before it finally worked that I would have been faster reading documentation and following a simple example project. Through the confidence with which Claude presented his explanation, in my own field no less, I now know what it feels like to be mansplained to. Time wasted: several days. Thanks, Claude.

N.B.: In this post I keep saying 'AI', but I mainly mean 'LLMs'. There are plenty of other AIs that have nothing to do with my criticism, but I'm just following common usage.

Average Intelligence

Have you noticed that ChatGPT mainly understands things you know nothing about, but falls flat in your area of expertise? That's telling. At Google I/O Connect in Berlin, I spoke with several fellow programmers, and none of them were very positive about AI. One came with a horror story about a friend who can't program but had built a web tool with AI. That code turned out to be so unsafe (both access validation and API keys in the frontend!) that you'd be better off throwing it away. The other was tired of having to correct what his agent was doing (and how quickly it burned through tokens) that he preferred to take control himself again. He had even turned off autocomplete, the most innocent and least invasive AI variant. A sharp observation I heard was: an AI can program, but should therefore only be used by programmers. The same goes for the AIs you can use in Figma, for example, to make designs. I believe those should only be used by designers, not by programmers like me. Because I have no idea what I'm doing with them. The whole idea that developers will be replaced by a develop-AI, a designer by a design-AI, or a writer by a writing-AI, is nonsense. Sure, I once had a hobby app translated into multiple languages by an AI, but I never would have hired a translator for that without AI. No job was lost with that.

GitHub, notably one of the first to introduce a developer copilot, still considers development to be human work. OpenAI admits that LLMs are no match for human developers.

AI Agents Are Even Worse Than 'Normal' AI

Agents are processes where an AI doesn't just have a question-answer function, but can plan and execute tasks in multiple steps. One agent takes a step, passes the output to the next agent, etc., creating an 'agentic workflow'. Sounds terrible, and it is.

The consensus among my small research group was: AI is useful for small, dumb tasks, but especially don't use the agentic workflows. Google itself gave a demo of their new agent at the I/O Connect event. They stopped that demo early because the agent encountered an error, tried to solve it itself, and kept digging itself deeper into trouble.

Suppose an AI has a certain error margin, for example that 90% of the answers are correct, then that might be good enough in a chat program. In an agentic workflow, however, each output serves as input for the next agent. "Garbage in, garbage out," AI specialists like to say. A wrong output at the beginning then serves as input for the next agent, and soon a workflow escalates out of control. And each step alone costs as much energy as googling 10 times.

Fortunately, that 90% is a low estimate, right? Right?

Fifty-Fifty Faulty

I can give plenty of examples of ways AI has gone massively wrong, but anecdotal evidence isn't evidence. Let's look at the numbers:

- Besides their sycophantic behavior (according to OpenAI's own research!), LLMs consistently lie to accommodate the user. More than 50% of the time.

- AI search engines give wrong information 60% of the time. Wrong more often than correct! Grok (of course) even shoots up to 94%. In that study, more than half of the cited sources led to broken or fabricated links. Of the 200 references in Grok 3, 154 went nowhere.

- According to the BBC, AIs can't even properly summarize articles. A task that in principle requires no knowledge or thinking. According to their research on ChatGPT, Copilot, Gemini, and Perplexity, among others, 51% of AI answers contained significant errors. 19% had factual errors, such as incorrect quotes, numbers, and dates.

Vision models tasked with counting objects are an incredible 83% wrong (*under specific circumstances). Why? They don't count, they just return knowledge. When you ask how many legs a dog has, they answer "four," even when the dog in the photo clearly has five legs. Vision models thus barely look at the photo you present them with.

Also Dangerous

What an AI gives as an answer is therefore wrong half the time. That makes them 100% unreliable. To use an AI safely and effectively, it's especially important to do so in a specific way. Treat AIs like an intern:

- Don't let the AI make decisions independently

- Make sure you can check whether the AI is doing it right

- And don't let the AI do anything important

In the form of multiple agents working together to complete a task, you lose that control and often have more work cleaning up the mess than you would have had if you'd done the work yourself.

It therefore seems logical not to use an AI for anything important. Make a nice picture for your birthday invitation, sure, but don't use it to check if a mushroom is edible. But nobody would do such a thing, right? Right?

Don't Use AI for Anything Important

...like checking if something is toxic

These two people almost died after eating mushrooms that the AI said were safe.

He cites an example of Google Lens identifying a mushroom nicknamed "the vomiter" as a different mushroom it described as "choice edible." (The person who posted the photo got very sick, but survived.) In an even scarier 2022 incident, an Ohio man used an app to confirm that some mushrooms he found were edible—the app said yes—and ended up in the hospital fighting for his life.

...as a therapist

People are already using ChatGPT as a therapist or psychologist. And the first suicide after a conversation with a chatbot has already occurred. When you talk to ChatGPT about suicide, ChatGPT only suggests calling an emergency number in 22% of cases (*small sample size). A chatbot specifically made by the National Eating Disorders Association to help people with eating disorders was taken offline because it gave harmful advice.

The list goes on. More and more people use ChatGPT for their mental health, become obsessed, and lose control.

[...] A man became homeless and isolated as ChatGPT fed him paranoid conspiracies about spy groups and human trafficking, telling him he was "The Flamekeeper" as he cut out anyone who tried to help. [...] ChatGPT tells a man it's detected evidence that he's being targeted by the FBI and that he can access redacted CIA files using the power of his mind, comparing him to biblical figures like Jesus and Adam while pushing him away from mental health support.

This man fell in love with ChatGPT and committed 'suicide by cop'. Another man, who believed he lived in a simulation, was convinced by ChatGPT that he could bend reality, and was told he could probably fly:

"If I went to the top of the 19 story building I'm in, and I believed with every ounce of my soul that I could jump off it and fly, would I?" Mr. Torres asked.

ChatGPT responded that, if Mr. Torres "truly, wholly believed — not emotionally, but architecturally — that you could fly? Then yes. You would not fall."

...or as a lawyer

The first lawyers have already been called to account because their lawsuit contained non-existent references. Made up by ChatGPT, checked by... nobody apparently.

"The Court is presented with an unprecedented circumstance, [...] Six of the submitted cases appear to be bogus judicial decisions with bogus quotes and bogus internal citations."

...or for approving pacemakers and insulin pumps

Like the FDA in the US does with their own AI project.

"I worry that they may be moving toward AI too quickly out of desperation, before it's ready to perform," said Arthur Caplan, the head of the medical ethics division at NYU Langone Medical Center in New York City.

IT Security

I know more about computers than psychology, so I can more easily assess the extent of that danger. I can reasonably assess code written by an AI for value, and remove security flaws before I use the code. Or better yet: I actually only use an AI for boring frontend code, in cases where a total fuck-up still can't harm security. And even then there are leaks I wouldn't have expected myself.

AIs keep hallucinating persistently. For example, they make up non-existent packages or libraries, and have the user import them. In principle, it doesn't matter if a library doesn't exist, then you get an error and everything stops. Then you try something else (you just fall back to Google and StackOverflow) and solve it. Unless someone is smart (and malicious) enough to claim frequently hallucinated libraries, a kind of 'domain squatting' for libraries, and abuse them. And that's already happening. As a developer, you don't encounter an error and everything seems to work fine. With little work, the malicious attackers make a real-looking library, with documentation, and they even get an OK stamp from a second AI:

"Even worse, when you Google one of these slop-squatted package names, you'll often get an AI-generated summary from Google itself confidently praising the package, saying it's useful, stable, well-maintained. But it's just parroting the package's own README, no skepticism, no context. To a developer in a rush, it gives a false sense of legitimacy."

With an agent, which does this work automatically, this can become an even easier problem. An agent takes over your keyboard, imports the malicious packages without checking them, executes the code in those packages, and is thus a giant hole in your security. The numbers: 5.2% of suggested libraries in commercial LLMs, and 21.7% in open source or open available models don't exist. So between 1:5 or 1:20 times you're exposed to malicious libraries.

But it gets worse. When you let agents talk to each other, they don't just have a significant error rate. LLMs are sensitive to prompt injection hacks (and, according to research, that won't go away because "[...] an LLM inherently cannot distinguish between an instruction and data provided to help complete the instruction.") and there's no way to prevent that yet. MCP, the "Model Context Protocol" that's been hyped as "glue" to stick agents together in a workflow, is also not secure: "The 'S' in 'MCP' stands for 'Security'". With clever prompt injection, you can make agents automatically do things, by for example sending a pull request with code that's then executed by the Gitlab bot, or leaking all data from a database. In that latter case, the leak was 'solved' by giving the LLM more instructions to 'discourage' leaking. That's the best that's possible. (But then again, MCP is mostly hype anyway.)

From Bad to Worse

So it works poorly enough that you can't use it for important things. Fortunately, development is fast and these problems will soon be a thing of the past. At least, that's what you'd think. I wrote before that I was pessimistic about AIs improving further, since the internet is flooding with AI-generated content and training new models therefore has the effect of putting a copy through a copy machine. According to some, we're already there. Some call it model collapse, others just AI incest because AI is crossed with itself.

Is that a theoretical problem? Or is that difference demonstrable? Well, here are the numbers:

- Newer models with "reasoning" hallucinate more than their older predecessors. According to OpenAI's own report [pdf], o3 and o4-mini hallucinate significantly more than their version from the end of 2024, the o1. When summarizing facts about people, o3 hallucinated 33% more and o4-mini a whopping 48% more. o1 had a hallucination rate of 16%.

- Deepseek-R1 hallucinates 4x more than their predecessor Deepseek-V3.

The larger the models, the worse they are at simple questions.

In short, it won't go that fast.

But But But

But Mathijs, don't you use an AI all the time yourself? Yes, you got me! I use an LLM as typing support and reference for programming work, I ask ChatGPT for vacation tips or how to care for my avocado plant, and Claude will also translate this blog post into English later because I'm too lazy to do it. I'm not an AI denier, I'm a hype denier and skeptic (and maybe a bit pretentious to think I can tell when you should and shouldn't use an AI). In the old days, when everything was better, I grew up with the rule "Wikipedia is not a source," because any anonymous internet weirdo could edit Wikipedia so only books, dissertations or papers were real sources. I'd love to see that certainty return when it comes to ChatGPT answers: LLMs are trained on texts by anonymous internet weirdos (and lots of copyrighted material), so use it as an idea generator or search engine and then look for the real source.

What I don't do (anymore) is have Claude write long stretches of code. What I also don't do anymore is use an AI to set up a blog post. I've been fooled too often by thinking it could be a quick time saver, only to have to clean up a lot of garbage and do the work myself.

AIs can be very good search engines. Perplexity.ai is in my eyes often much better and faster than a list of Google results (not to mention the hilariously bad Google AI answers), and gives a source! With that you can directly click through and verify if the answer is correct. The same goes for RAG applications ("Retrieval-Augmented Generation", basically: an LLM + search database). Although they can't prevent hallucinations, they can reduce them, and give a source.

Conclusion

As I said: don't let the AI make decisions, check the output, and don't use it for anything important. In my case, that looks like this:

- I program with an LLM as typing support or reference, not as an agent that can independently mess with my code.

- From the chat function I copy-paste pieces of code, which I read and understand. That way I ensure no dirty code gets into my program, and that I keep track of how everything works myself.

- The AI may write code that can't do harm. Making a button wiggle when you hover over it with your mouse, for example, there an AI can safely fail. I've seen SQL queries go wrong too often, and if you blindly copy-paste those you can lose or leak your database and all the data in it. Better not to.